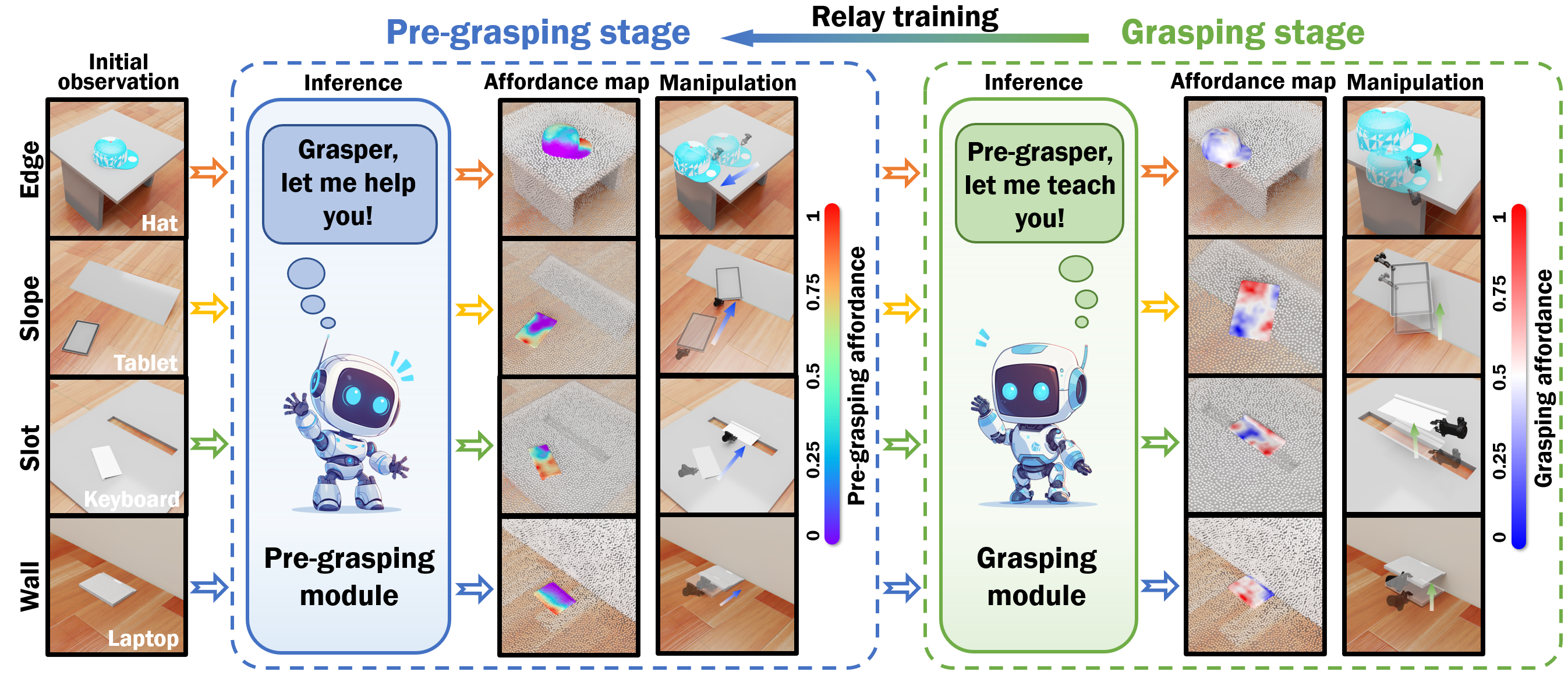

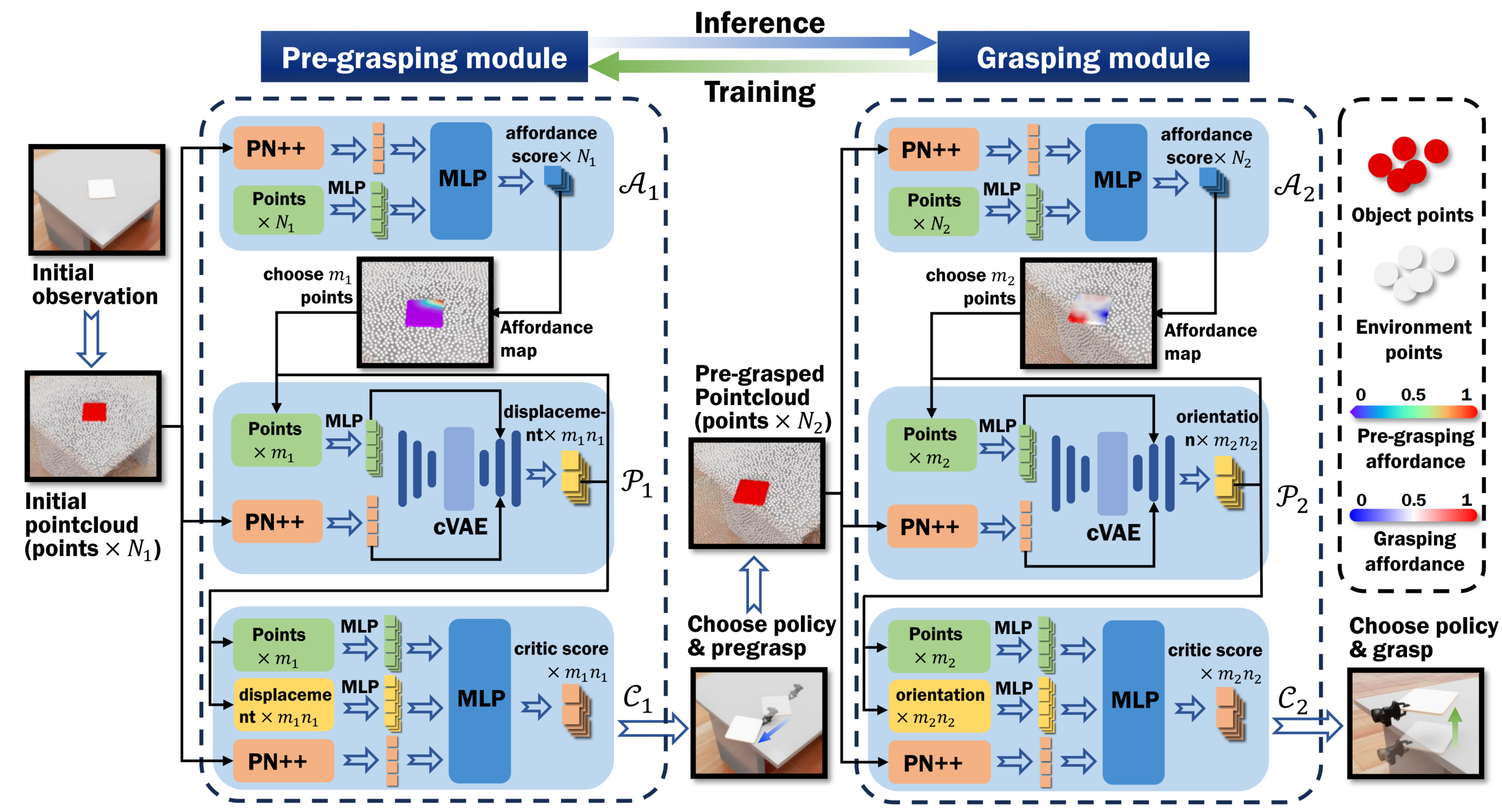

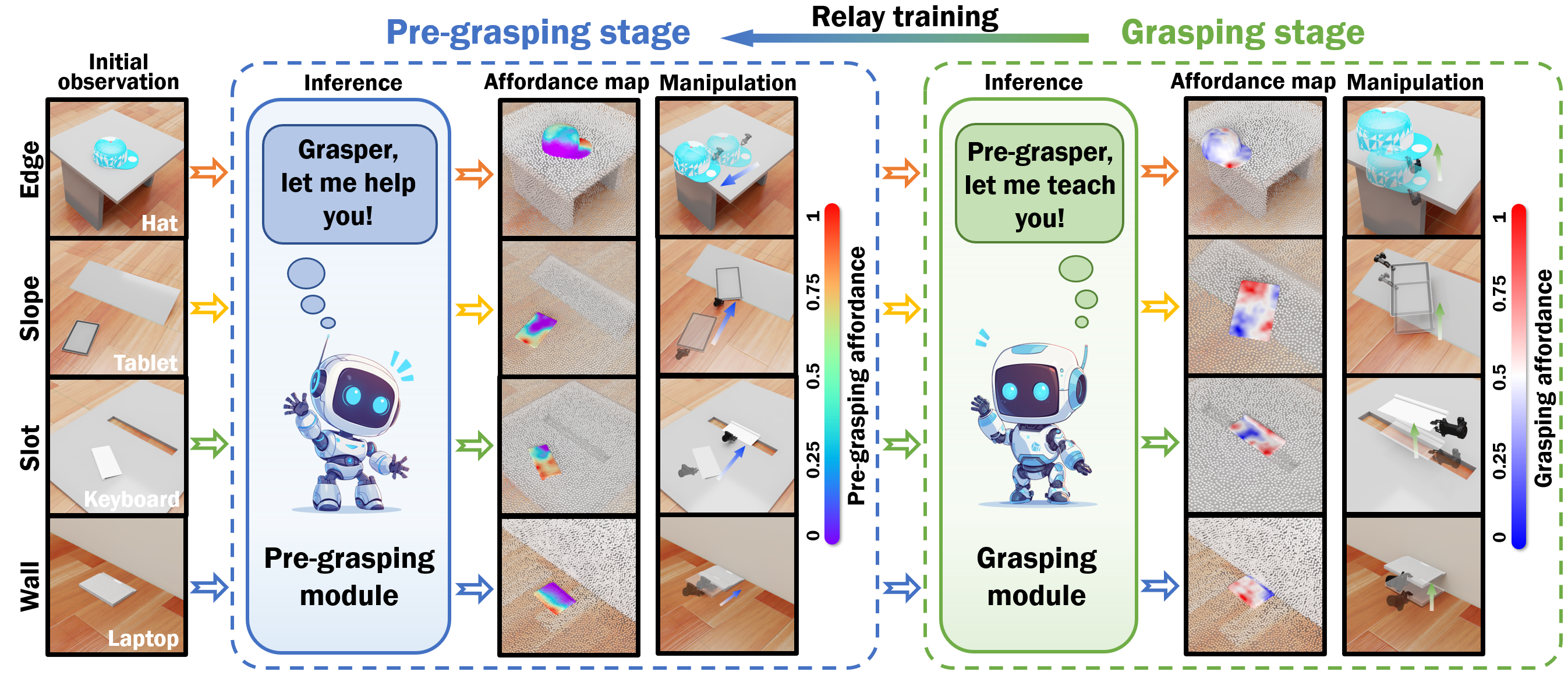

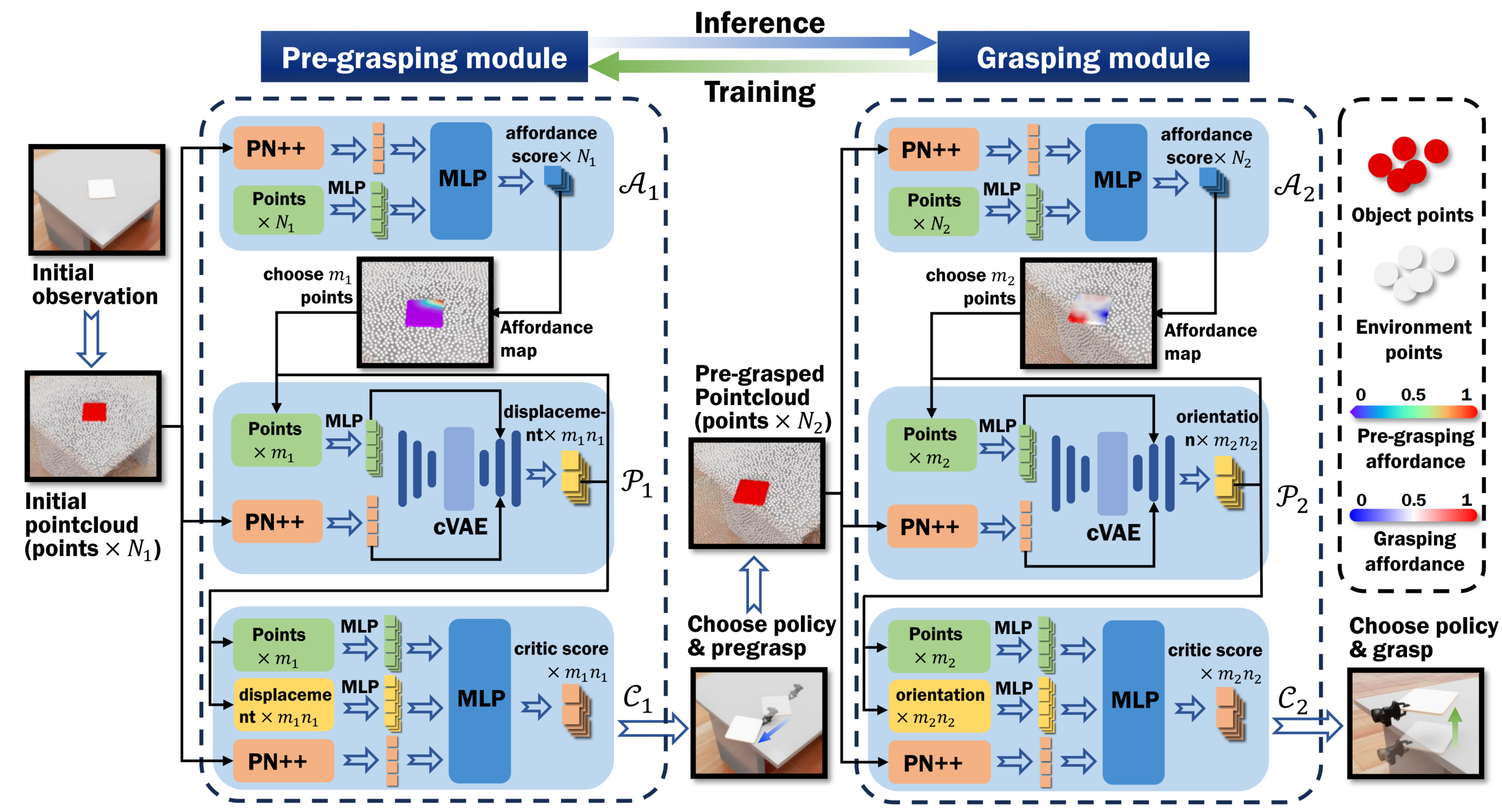

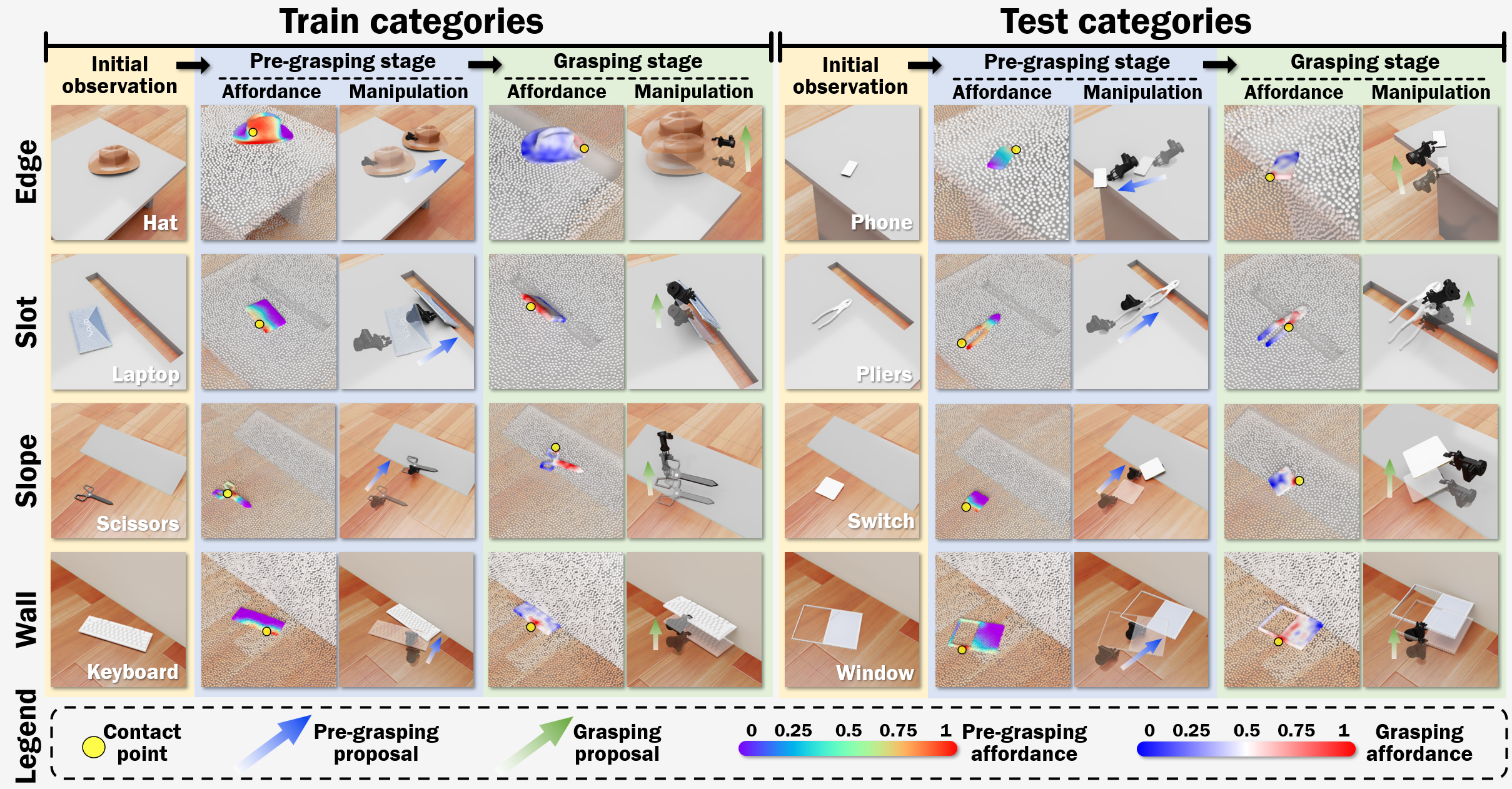

Qualitative Results. We demonstrate pre-grasping manipulation on training and testing categories in four scenarios: edge, slot, slope, and wall. Affordance maps highlight effective interaction areas, showing PreAfford's capability to devise suitable pre-grasping and grasping strategies for various object categories and scenes, including both seen and unseen objects.

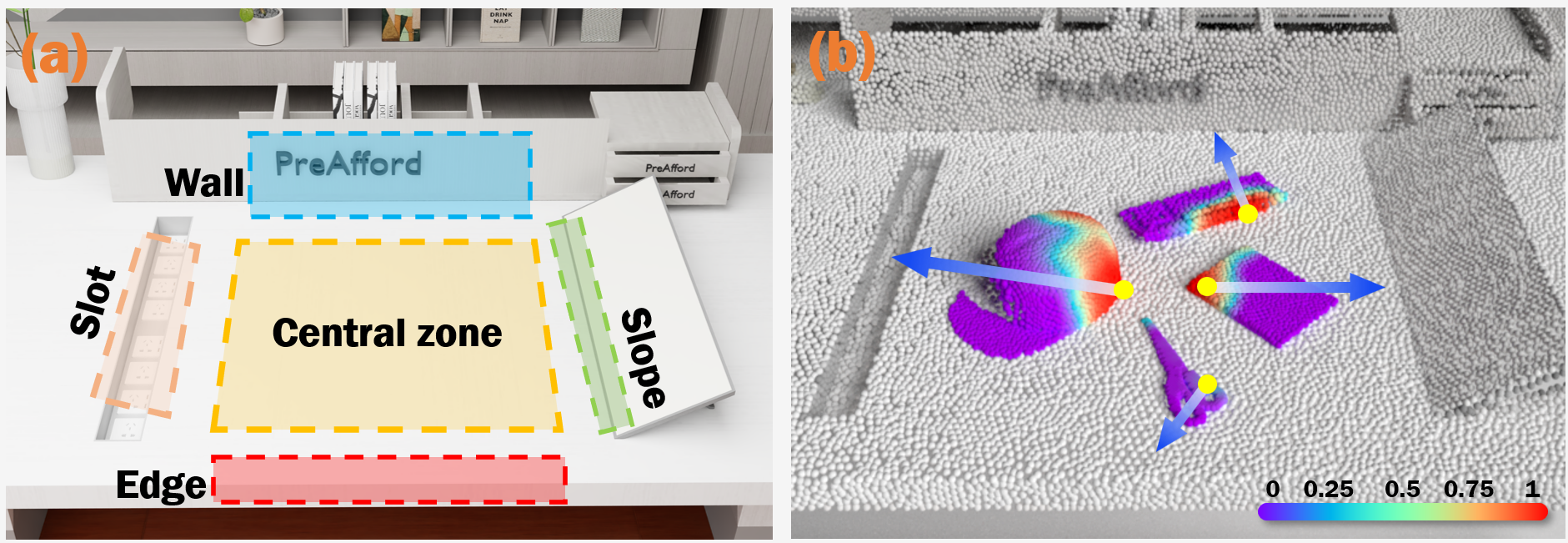

Multi-feature scenario. PreAfford effectively addresses scenarios where multiple environmental features are present simultaneously. (a) A complex environment, (b) Affordance heatmap.

Comparison with baselines. On test object categories, pre-grasping raises the average grasping success rate by 52.9 percentage points (4.6% to 57.5%), and the closed-loop strategy adds a further 16.4 points (57.5% to 73.9%).

| Setting | Train: Edge | Train: Wall | Train: Slope | Train: Slot | Train: Multi | Train: Avg. | Test: Edge | Test: Wall | Test: Slope | Test: Slot | Test: Multi | Test: Avg. |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| W/o pre-grasping | 2.3 | 3.8 | 4.3 | 3.4 | 4.0 | 3.6 | 6.1 | 2.3 | 2.9 | 5.7 | 6.0 | 4.6 |
| Random-direction Push | 21.6 | 10.3 | 6.4 | 16.8 | 18.1 | 14.6 | 24.9 | 17.2 | 12.1 | 18.4 | 23.0 | 19.1 |
| Center-point Push | 32.5 | 23.7 | 40.5 | 39.2 | 39.0 | 35.0 | 25.1 | 17.4 | 28.0 | 30.2 | 21.5 | 24.4 |
| Ours w/o closed-loop | 67.2 | 41.5 | 58.3 | 76.9 | 63.6 | 61.5 | 56.4 | 37.3 | 62.6 | 75.8 | 55.4 | 57.5 |
| Ours | 81.4 | 43.4 | 73.1 | 83.5 | 74.1 | 71.1 | 83.7 | 47.6 | 80.5 | 83.0 | 74.6 | 73.9 |
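The improvements quoted in the caption match the differences between the test-category averages in the table. The following is a minimal Python sketch of that arithmetic; the variable and function names are illustrative and not taken from the paper, and the numbers are copied from the test-category columns (Edge, Wall, Slope, Slot, Multi).

```python
# Per-scenario success rates (%) on test object categories, copied from the table.
# Order of values: Edge, Wall, Slope, Slot, Multi.
test_rates = {
    "W/o pre-grasping": [6.1, 2.3, 2.9, 5.7, 6.0],
    "Ours w/o closed-loop": [56.4, 37.3, 62.6, 75.8, 55.4],
    "Ours": [83.7, 47.6, 80.5, 83.0, 74.6],
}


def mean_rate(values):
    """Average success rate over scenarios, rounded to one decimal like the table."""
    return round(sum(values) / len(values), 1)


averages = {name: mean_rate(values) for name, values in test_rates.items()}

# Gain from adding pre-grasping (open loop) over direct grasping, in percentage points.
pre_grasp_gain = round(
    averages["Ours w/o closed-loop"] - averages["W/o pre-grasping"], 1
)
# Additional gain from the closed-loop strategy, in percentage points.
closed_loop_gain = round(averages["Ours"] - averages["Ours w/o closed-loop"], 1)

print(averages)
print(pre_grasp_gain)    # 52.9
print(closed_loop_gain)  # 16.4
```

Both gains are differences of success rates, so they are best read as percentage points rather than relative improvements.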

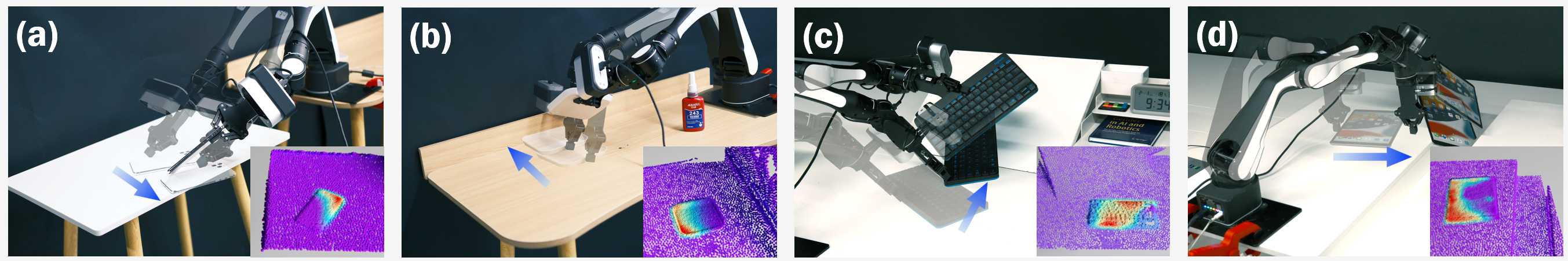

Real-world pre-grasping manipulations with affordance maps. Red areas in the maps indicate optimal pushing locations. Point clouds are captured by a Femto Bolt camera. (a) Moving a tablet to the table edge, (b) pushing a plate towards a wall, (c) pushing a keyboard up a slope, and (d) sliding a tablet into a slot.
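To illustrate how an affordance map can be turned into a pushing location, here is a minimal Python sketch that picks the point with the highest score. It assumes the map is a list of per-point scores aligned with the point cloud; the function name `best_push_point` and the toy data are hypothetical and do not describe the actual PreAfford pipeline.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def best_push_point(points: List[Point], scores: List[float]) -> Tuple[Point, float]:
    """Return the point with the highest affordance score and that score.

    Assumption: scores[i] is the affordance value predicted for points[i].
    """
    if not points or len(points) != len(scores):
        raise ValueError("points and scores must be non-empty and the same length")
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return points[best_index], scores[best_index]


# Toy point cloud (metres) with made-up affordance scores in [0, 1].
points = [(0.10, 0.00, 0.02), (0.12, 0.05, 0.02), (0.15, 0.10, 0.02)]
scores = [0.21, 0.87, 0.45]

point, score = best_push_point(points, scores)
print(point, score)
```

A real system would typically also predict a pushing direction for the selected point; this sketch covers only the selection of the contact location.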

Real-world experiment results. Experiments were conducted twice for each object in every scene, comparing direct grasping (without pre-grasping) to grasping after pre-grasping. Success rates are presented as percentages.

| Setting | Seen: Edge | Seen: Wall | Seen: Slope | Seen: Slot | Seen: Multi | Seen: Avg. | Unseen: Edge | Unseen: Wall | Unseen: Slope | Unseen: Slot | Unseen: Multi | Unseen: Avg. |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| W/o pre-grasping | 0 | 0 | 0 | 0 | 0 | 0 | 10 | 0 | 5 | 0 | 0 | 3 |
| With pre-grasping | 70 | 45 | 80 | 90 | 85 | 74 | 80 | 30 | 75 | 90 | 85 | 72 |